The Age of the AI Agent

The investment management industry stands at an evolutionary crossroads in its adoption of Artificial Intelligence (AI). AI agents are increasingly used in the daily workflows of portfolio managers, analysts, and compliance officers, yet most firms cannot precisely describe the type of “intelligence” they have deployed.

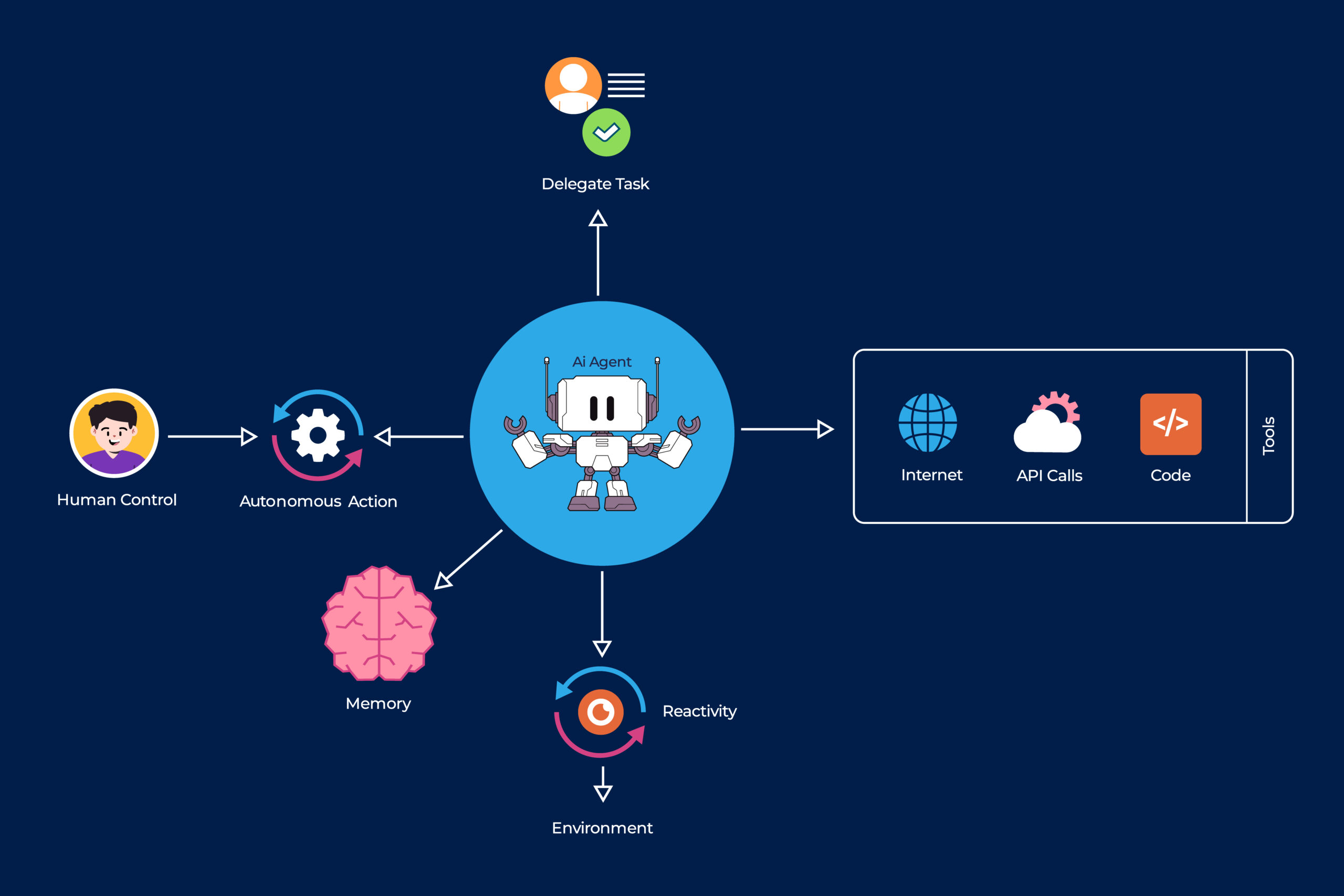

Agentic AI, delivered through AI agents, takes large language models (LLMs) many steps further than widely used chat assistants such as ChatGPT. It goes beyond asking a question and getting a response: agentic AI can observe, analyze, decide, and sometimes act on behalf of a human within defined boundaries. Investment firms need to decide what role each agent plays: Is it a decision-support tool, an autonomous research analyst, or a delegated trader?

Each AI adoption and implementation presents an opportunity to set boundaries and ring-fence the tools. If you cannot classify your AI, you cannot govern it, and you certainly cannot scale it. To that end, our research team, a collaboration between DePaul University and Panthera Solutions, developed a multi-dimensional classification system for AI agents in investment management. This article is an excerpt from an academic paper, “A Multi-Dimensional Classification System For AI Agents In The Investment Industry,” which was recently submitted to a peer-reviewed journal.

This system provides practitioners, boards, and regulators with a common language for evaluating agentic systems based on autonomy, function, learning capability, and governance. Investment leaders will gain an understanding of the steps needed to design an AI taxonomy and create a framework for mapping AI agents deployed at their firms.

Without a shared taxonomy, we risk both over-trusting and under-utilizing a technology that is already reshaping how capital is allocated.

Why a Taxonomy Matters

An AI taxonomy should not constrain innovation. If carefully designed, it should allow firms to articulate the problem the agent solves, who is accountable, and how model risk is mitigated. Without such clarity, AI adoption remains tactical rather than strategic.

Investment managers today treat AI in one of two ways: as a set of functional tools, or as a systemic, integrated part of the investment decision process.

The functional approach includes using AI for risk scoring, natural language processors for sentiment extraction, and co-pilots that summarize portfolio exposures. This improves efficiency and consistency but leaves the core decision architecture unchanged. The organization remains human-centric, with AI serving as a peripheral enhancer.

A smaller but growing number of firms are pursuing the systemic route. They integrate AI agents into the investment design process as adaptive participants rather than auxiliary tools. Here, autonomy, learning capacity, and governance are explicitly defined. The firm becomes a decision ecosystem, where human judgment and machine reasoning co-exist and co-evolve.

This distinction is critical. Function-driven adoption results in faster tools, but systemic adoption creates smarter organizations. Both can co-exist but only the latter yields a sustained comparative advantage.

Intelligent Integration

Neuroscientist Antonio Damasio reminded us that all intelligence strives for homeostasis, a balance with its environment. Financial markets are complex adaptive systems (Lo, 2009), so they too must maintain equilibrium: between data and judgment, automation and accountability, profit and planetary stability. A smart AI framework would reflect that ecology by mapping AI agents along three orthogonal dimensions:

First, consider the Investment Process: Where in the value chain does the agent operate?

Typically, an investment process comprises five stages—idea generation, assessment, decision, execution, and monitoring—which are then embedded in compliance and stakeholder reporting workflows. AI agents can augment any stage, but decision rights must remain proportional to interpretability (Figure 1).

Figure 1.

Mapping agents to the five stages below (Figure 1) clarifies accountability and prevents governance blind spots.

Idea Generation: Perception-layer agents such as RavenPack transform unstructured text into sentiment scores and event features.

Idea Assessment: Co-pilots like BlackRock Aladdin Co-pilot surface portfolio exposures and scenario summaries, accelerating insight without removing human sign-off.

Decision Point: Decision Intelligence systems (as exemplified by Panthera’s Decision GPS schematic above) are designed to build risk–return asymmetries grounded in the most relevant and validated evidence, with the aim of optimizing decision quality.

Execution: Algorithmic-trading agents act within explicit risk budgets under conditional autonomy and continuous supervision.

Monitoring: Agentic AI autonomously tracks portfolio exposures and identifies emerging risks.

In addition to these five stages, this schematic can improve Compliance and Stakeholder Reporting. AI agents can use pattern recognition to flag breaches, and they can translate complex performance data into narrative outputs for clients and regulators.
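The stage mapping described above can be sketched as a small registry. This is a minimal illustration, not the classification system from the underlying paper: the `AgentRecord` type, the `accountable_owner` field, and the agent names and roles are all hypothetical, and only the five stage names come from the text.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Stage(Enum):
    """The five investment-process stages named in the article."""
    IDEA_GENERATION = "Idea Generation"
    IDEA_ASSESSMENT = "Idea Assessment"
    DECISION_POINT = "Decision Point"
    EXECUTION = "Execution"
    MONITORING = "Monitoring"


@dataclass(frozen=True)
class AgentRecord:
    name: str                          # hypothetical agent label
    stage: Stage                       # where in the value chain it operates
    accountable_owner: Optional[str]   # hypothetical: named human role, or None


def map_to_stages(agents):
    """Group agent names by stage and list agents with no accountable owner."""
    by_stage = {stage: [] for stage in Stage}
    for agent in agents:
        by_stage[agent.stage].append(agent.name)
    unowned = [a.name for a in agents if not a.accountable_owner]
    return by_stage, unowned


# Illustrative registry; names and roles are assumptions, not real deployments.
registry = [
    AgentRecord("news-sentiment-feed", Stage.IDEA_GENERATION, "Head of Research"),
    AgentRecord("exposure-copilot", Stage.IDEA_ASSESSMENT, "Portfolio Manager"),
    AgentRecord("execution-algo", Stage.EXECUTION, None),
]

by_stage, unowned = map_to_stages(registry)
for stage, names in by_stage.items():
    print(f"{stage.value}: {names or 'no agent mapped'}")
print("Agents without an accountable owner:", unowned)
```

In this toy registry, the execution agent has no named owner, which is the kind of accountability gap the mapping is meant to surface. A stage with no agent mapped may simply be human-run, so it is reported rather than treated as an error.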

Second, look at Comparative Advantage: Which competitive edge does the agent enhance, informational, analytical, or behavioral?

AI does not create alpha, but it can amplify an existing edge. One way to map this dimension is to distinguish among three archetypes (Figure 2):

Informational Advantage: Superior access or speed of data. Short-lived and easily commoditized.

Analytical Advantage: Superior synthesis and inference. Requires proprietary expertise; defensible but time-decaying.

Behavioral Advantage: Superior discipline in exploiting others’ biases or avoiding your own.

Figure 2

Strategic alignment means matching an agent type to a specific investor/firm skill set. For example, a quant house may deploy reinforcement learning for greater analytical depth, while a discretionary firm may use co-pilots to monitor reasoning quality and preserve behavioral discipline.

Third, evaluate the Complexity Range: Under what degree of uncertainty does the agent function, from measurable risk to radical ambiguity?

Markets oscillate between risk and uncertainty. Extending Knight’s and Taleb’s typologies, we distinguish four operative regimes.

Figure 3

Governance: From Ethics to Evidence

Regulatory and policy frameworks, such as the EU AI Act and the OECD Framework for the Classification of AI Systems, are codifying expectations for explainability and accountability. A taxonomy that links these mandates to practical governance levers would be considered best practice. A classification matrix then becomes both a risk-control system and a strategic compass.

Strategic Implications for CIOs

Finance’s adaptive nature demands augmented intelligence: systems designed to extend human adaptability, not replace it. Humans contribute contextual judgment, ethical reasoning, and sense-making; agents contribute scale, speed, and consistency. Together, they enhance decision quality, the ultimate KPI in investment management.

Firms that design around decision architecture, not algorithms, will compound their advantage.

Therefore:

Map your ecosystem: Catalogue AI agents and plot them within the framework to expose overlaps and blind spots.

Prioritize comparative advantage: Invest where AI strengthens existing advantages.

Institutionalize learning loops: Treat each deployment as an adaptive experiment; measure impact on decision quality, not headline efficiency.
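As a rough sketch of the first step above, a catalogue can be plotted on a grid of investment stage by comparative advantage. The catalogue entries, agent names, and the `plot_catalogue` helper are hypothetical; here "overlap" is assumed to mean more than one agent in the same grid cell, and "blind spot" a stage with no catalogued agent.

```python
from collections import defaultdict
from enum import Enum


class Stage(Enum):
    IDEA_GENERATION = "Idea Generation"
    IDEA_ASSESSMENT = "Idea Assessment"
    DECISION_POINT = "Decision Point"
    EXECUTION = "Execution"
    MONITORING = "Monitoring"


class Advantage(Enum):
    INFORMATIONAL = "Informational"
    ANALYTICAL = "Analytical"
    BEHAVIORAL = "Behavioral"


def plot_catalogue(catalogue):
    """Place each agent in a (stage, advantage) cell, then report
    overlaps (cells with several agents) and blind spots (empty stages)."""
    grid = defaultdict(list)
    for name, stage, advantage in catalogue:
        grid[(stage, advantage)].append(name)
    overlaps = {cell: names for cell, names in grid.items() if len(names) > 1}
    covered_stages = {stage for stage, _ in grid}
    blind_spots = [stage for stage in Stage if stage not in covered_stages]
    return overlaps, blind_spots


# Hypothetical catalogue: (agent name, stage, advantage it is meant to enhance).
catalogue = [
    ("sentiment-feed", Stage.IDEA_GENERATION, Advantage.INFORMATIONAL),
    ("event-scanner", Stage.IDEA_GENERATION, Advantage.INFORMATIONAL),
    ("exposure-copilot", Stage.IDEA_ASSESSMENT, Advantage.ANALYTICAL),
    ("risk-monitor", Stage.MONITORING, Advantage.BEHAVIORAL),
]

overlaps, blind_spots = plot_catalogue(catalogue)
for (stage, advantage), names in overlaps.items():
    print(f"Overlap at {stage.value} / {advantage.value}: {names}")
print("Stages with no catalogued agent:", [s.value for s in blind_spots])
```

A fuller version would add the complexity range as a third axis. It is left out here because the four regimes are defined in Figure 3 rather than in the text.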

In Practice

Augmented intelligence, properly classified and governed, allows capital allocation to become not only faster but wiser, learning as it allocates. So, classify before you scale. Align before you automate. And remember, in decision quality, design beats luck.